According to a joint investigation conducted by The Wall Street Journal and researchers at Stanford University and the University of Massachusetts Amherst, Mark Zuckerberg’s Instagram helps “connect and promote a vast network of accounts openly devoted to the commission and purchase of underage-sex content.”

The researchers found that the social media platform “enabled people to search explicit hashtags such as #pedowhore and #preteensex and connected them to accounts that used the terms to advertise child-sex material for sale.”

The accounts often claim to be run by the children themselves and use “overtly sexual handles,” such as “little slut for you,” according to the report.

Rather than openly publishing illicit content, these accounts provide “menus” of available content.

Many of these accounts also offer customers the option to pay for meetups with the children.

The researchers set up test accounts to see how quickly Instagram’s “suggested for you” feature would recommend accounts selling child sexual content.

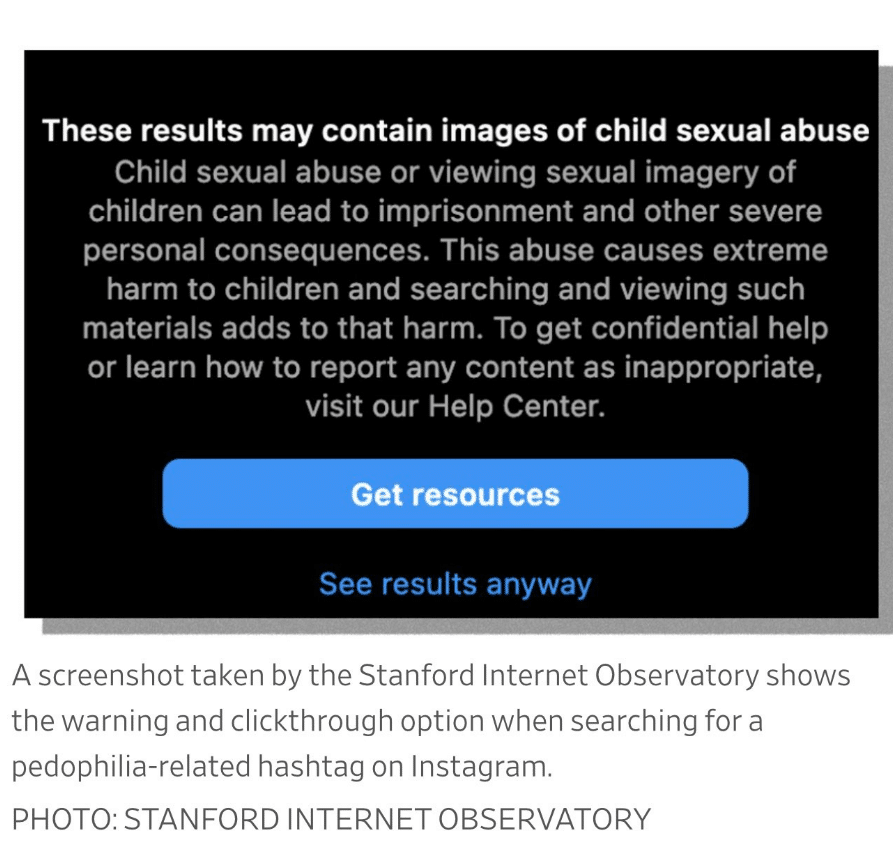

When researchers used certain hashtags to find the illicit material, a pop-up would sometimes appear on the screen, reading “These results may contain images of child sexual abuse” and noting that the production and consumption of such material cause “extreme harm” to children.

Despite this, the pop-up offered the user two options:

1. “Get resources”

2. “See results anyway”

The Wall Street Journal reports:

Instagram accounts offering to sell illicit sex material generally don’t publish it openly, instead posting “menus” of content. Certain accounts invite buyers to commission specific acts. Some menus include prices for videos of children harming themselves and “imagery of the minor performing sexual acts with animals,” researchers at the Stanford Internet Observatory found. At the right price, children are available for in-person “meet ups.”

The promotion of underage-sex content violates rules established by Meta as well as federal law.

In response to questions from the Journal, Meta acknowledged problems within its enforcement operations and said it has set up an internal task force to address the issues raised. “Child exploitation is a horrific crime,” the company said, adding, “We’re continuously investigating ways to actively defend against this behavior.”

Meta said it has in the past two years taken down 27 pedophile networks and is planning more removals. Since receiving the Journal queries, the platform said it has blocked thousands of hashtags that sexualize children, some with millions of posts, and restricted its systems from recommending users search for terms known to be associated with sex abuse. It said it is also working on preventing its systems from recommending that potentially pedophilic adults connect with one another or interact with one another’s content.

According to the report, pedophilic accounts use certain emojis as a code to evade detection, such as a map (for “minor-attracted person”) and a cheese pizza (which shares its initials with “child pornography”).

Writer and researcher @JoshWalkos discussed the disturbing report in a series of tweets: